TL;DR: Your Quick Guide to NLP

- What is NLP? Natural Language Processing (NLP) is a field of AI that teaches computers to read, understand, and generate human language. It turns unstructured text (emails, tickets, reviews) into structured data your systems can act on.

- Why does it matter? NLP automates text-based work to cut operational costs (e.g., ticket triage), uncover revenue opportunities (e.g., analyzing customer feedback), and improve customer experience (e.g., 24/7 chatbots).

- How does it work? Modern NLP uses pre-trained models built on the Transformer architecture (like GPT or BERT) that learn statistical patterns from massive text datasets. These models can be fine-tuned on your specific data for tasks like classification or entity extraction.

- What should you do first? Don't start a massive R&D project. Scope a 2-week pilot focused on a high-pain, measurable business problem, like automating support ticket classification or summarizing competitor press releases.

Who This Guide Is For

- CTOs & Heads of Engineering: You need to decide whether to build a custom NLP solution or buy an API, and who to hire for the job.

- Founders & Product Leads: You're scoping the budget, timeline, and business impact for an AI feature powered by NLP.

- Talent Ops & Procurement: You're evaluating the risk and ROI of bringing in specialized AI talent for an NLP project.

This guide is for operators who need to make a decision and see results in weeks, not months.

Quick Answer: The NLP Decision Framework (Build vs. Buy)

One of the first decisions you'll face is whether to build a custom NLP model or use a third-party API from vendors like OpenAI, Google, or Cohere. Use this framework to make the right call.

Our recommendation: For most companies, a hybrid approach is best. Start by "buying" an API to quickly validate your use case. If it proves valuable and you hit limitations, then invest in "building" a custom solution.
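One way to keep the hybrid path open is to hide the classifier behind a small interface, so a vendor API can later be swapped for a custom model without touching the calling code. The sketch below is illustrative only: `TextClassifier`, `KeywordBaseline`, and `triage` are hypothetical names, and the keyword baseline merely stands in for whichever vendor API or fine-tuned model you actually use.

```python
from typing import Protocol


class TextClassifier(Protocol):
    """Anything that maps a piece of text to a label (hypothetical interface)."""

    def classify(self, text: str) -> str: ...


class KeywordBaseline:
    """Stand-in implementation; a real one would call a vendor API or a custom model."""

    def __init__(self, keywords: dict[str, str], default: str = "Other") -> None:
        self.keywords = keywords
        self.default = default

    def classify(self, text: str) -> str:
        lowered = text.lower()
        for keyword, label in self.keywords.items():
            if keyword in lowered:
                return label
        return self.default


def triage(texts: list[str], classifier: TextClassifier) -> list[str]:
    """Calling code depends only on the interface, not on build vs. buy."""
    return [classifier.classify(text) for text in texts]


baseline = KeywordBaseline({"invoice": "Billing", "error": "Bug report"})
labels = triage(["My invoice is wrong", "I see an error on login", "Hello"], baseline)
print(labels)
```

Because `triage` only relies on a `classify` method, moving from "buy" to "build" means writing one new class rather than rewriting the integration.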

2 Practical Examples of NLP in Action

Theory is useless without application. Here are two real-world examples showing how NLP solves specific business problems, including the architecture, team, and expected impact.

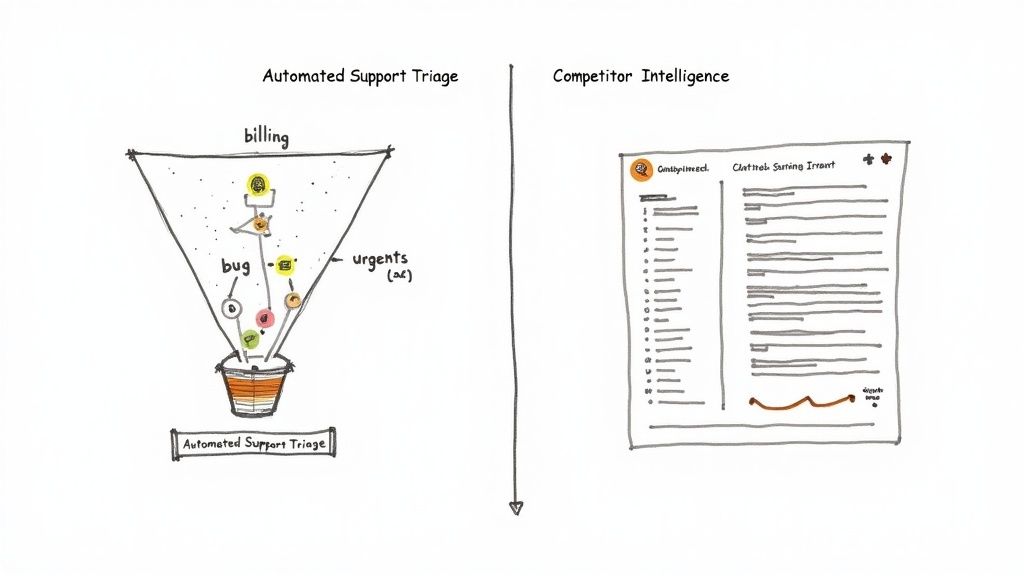

Example 1: Automated Customer Support Ticket Triage

As a company scales, manually sorting support tickets becomes a major bottleneck, slowing down response times and frustrating customers. An NLP triage system automates this process entirely.

Alt text: An architecture diagram showing how NLP is used to triage customer support tickets automatically.

The Business Problem:

A B2B SaaS company with 5,000 customers receives over 1,000 support tickets daily. Agents spend the first 60–90 minutes of each shift manually reading and routing tickets to the correct department (Billing, Tech Support, Sales). This creates a significant delay in first-response time.

The NLP Solution:

Build a classification model that automatically reads each incoming ticket and assigns two tags:

- Intent: "Billing question," "Bug report," "Feature request."

- Priority: "Low," "Medium," "High," "Critical."

The system then integrates with the help desk (e.g., Zendesk) to route the ticket to the right agent queue automatically.
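The routing step can be sketched as a lookup from the predicted tags to a queue. This is a minimal illustration, assuming the classifier has already produced an intent and a priority. The queue names, the `Ticket` class, and the escalation rule are hypothetical, and the actual help desk call (e.g., to Zendesk) is left out.

```python
from dataclasses import dataclass

# Hypothetical mapping from predicted intent to an agent queue.
INTENT_TO_QUEUE = {
    "Billing question": "billing",
    "Bug report": "tech-support",
    "Feature request": "product",
}


@dataclass
class Ticket:
    ticket_id: int
    intent: str    # predicted by the classification model
    priority: str  # "Low", "Medium", "High", or "Critical"


def route(ticket: Ticket) -> str:
    """Return the queue name for a ticket based on its predicted tags."""
    # Assumed rule: critical tickets skip the normal queues.
    if ticket.priority == "Critical":
        return "escalations"
    # Unknown intents fall back to a human triage queue.
    return INTENT_TO_QUEUE.get(ticket.intent, "manual-triage")


tickets = [
    Ticket(1, "Billing question", "Low"),
    Ticket(2, "Bug report", "Critical"),
    Ticket(3, "Password reset", "Medium"),
]
queues = [route(t) for t in tickets]
print(queues)
```

Keeping a fallback queue for unrecognized intents means a wrong or unexpected prediction still reaches a human rather than being dropped.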

Pilot Project Scope:

- Goal: Achieve >85% classification accuracy on live support tickets.

- Timeline: 4 weeks.

- Team: 1 Senior NLP Engineer, 1 Backend Engineer.

Business Impact:

- Primary Metric: Reduce average first-response time by 30% by eliminating the manual triage queue.

- Secondary Metric: Decrease misrouted tickets by 50%, improving internal efficiency.

Example 2: Competitor Intelligence from Public News

Tracking competitor moves is critical but time-consuming. An NLP pipeline can automate this reconnaissance, turning a flood of news articles into a structured intelligence dashboard.

The Business Problem:

A product marketing team needs to track competitor product launches, partnerships, and market sentiment. Manual scanning of news sites is inefficient and misses key signals.

The NLP Solution:

Create a script that uses Named Entity Recognition (NER) to extract key information from public sources.

- Ingest articles from targeted news APIs or RSS feeds.

- Use a pre-trained NER model to identify and extract mentions of competitor names, products, and key personnel.

- Analyze the sentiment (positive, negative, neutral) surrounding each mention.

- Push the structured data (Entity, Article Source, Date, Sentiment) into a database to power a dashboard in Metabase or Tableau.
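The last step, pushing structured records into a database, might look like the following. It is a sketch that uses an in-memory SQLite database purely for illustration. The table name, column names, and sample rows are hypothetical, and the entities and sentiment labels are assumed to come from the earlier NER and sentiment steps.

```python
import sqlite3

# Hypothetical records, as the NER and sentiment steps might produce them.
mentions = [
    ("InnovateCorp", "example-news.com", "2024-01-15", "positive"),
    ("FutureTech", "example-news.com", "2024-01-15", "negative"),
    ("InnovateCorp", "another-feed.org", "2024-01-16", "neutral"),
]

conn = sqlite3.connect(":memory:")  # swap for a real database in practice
conn.execute(
    """
    CREATE TABLE mentions (
        entity TEXT NOT NULL,
        article_source TEXT NOT NULL,
        published_on TEXT NOT NULL,
        sentiment TEXT NOT NULL
    )
    """
)
conn.executemany("INSERT INTO mentions VALUES (?, ?, ?, ?)", mentions)
conn.commit()

# The kind of aggregate a dashboard would chart: mentions per entity.
rows = conn.execute(
    "SELECT entity, COUNT(*) FROM mentions GROUP BY entity ORDER BY entity"
).fetchall()
print(rows)
```

A dashboard tool such as Metabase or Tableau would then query this table directly.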

Example Python Snippet (using Hugging Face Transformers):

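This code shows how to set up a pipeline that extracts named entities, such as organizations, from a block of text. The model used here has no dedicated product label, so product names typically surface under the miscellaneous (MISC) tag.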

    # A simple configuration to perform Named Entity Recognition (NER).
    # This snippet uses a pre-trained model to find organizations and other
    # named entities (such as products) in text.
    from transformers import pipeline

    # Load the pre-trained NER pipeline.
    # We use a robust model fine-tuned for entity recognition.
    # aggregation_strategy="simple" merges word pieces into whole entities
    # (it replaces the deprecated grouped_entities=True).
    ner_pipeline = pipeline(
        "ner",
        model="dbmdz/bert-large-cased-finetuned-conll03-english",
        aggregation_strategy="simple",
    )

    # Example text from a fictional news article
    article_text = """InnovateCorp announced its groundbreaking new product, the OmniDrive, challenging established players like FutureTech.
    Analysts suggest the OmniDrive's launch could significantly impact FutureTech's market share."""

    # Process the text to extract entities
    results = ner_pipeline(article_text)

    # Print the extracted entities for review.
    # Likely output: 'InnovateCorp' and 'FutureTech' tagged as organizations (ORG)
    # and 'OmniDrive' as a miscellaneous entity (MISC), which covers products.
    print(results)

This automates the data gathering, allowing the team to focus on analysis and strategy. A similar approach applies when using ChatGPT for SEO to analyze competitor content.
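Before the extracted entities reach a dashboard, they usually need filtering. The sketch below assumes the aggregated output format of the Hugging Face NER pipeline (a list of dicts with `entity_group`, `word`, and `score` keys). The sample values, the `keep_entities` helper, and the 0.80 threshold are illustrative assumptions, not real model output.

```python
# Illustrative sample in the shape the aggregated NER pipeline returns;
# the scores are made up, not real model output.
sample_results = [
    {"entity_group": "ORG", "word": "InnovateCorp", "score": 0.99},
    {"entity_group": "MISC", "word": "OmniDrive", "score": 0.91},
    {"entity_group": "ORG", "word": "FutureTech", "score": 0.98},
    {"entity_group": "ORG", "word": "FutureTech", "score": 0.97},
    {"entity_group": "LOC", "word": "Analyst", "score": 0.42},
]


def keep_entities(results, groups=("ORG", "MISC"), min_score=0.80):
    """Keep confident entities of the wanted types, de-duplicated in order."""
    seen = set()
    kept = []
    for item in results:
        key = (item["entity_group"], item["word"])
        wanted = item["entity_group"] in groups and item["score"] >= min_score
        if wanted and key not in seen:
            seen.add(key)
            kept.append(key)
    return kept


entities = keep_entities(sample_results)
print(entities)
```

Dropping low-confidence and off-type entities early keeps noise out of the downstream sentiment and dashboard steps.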

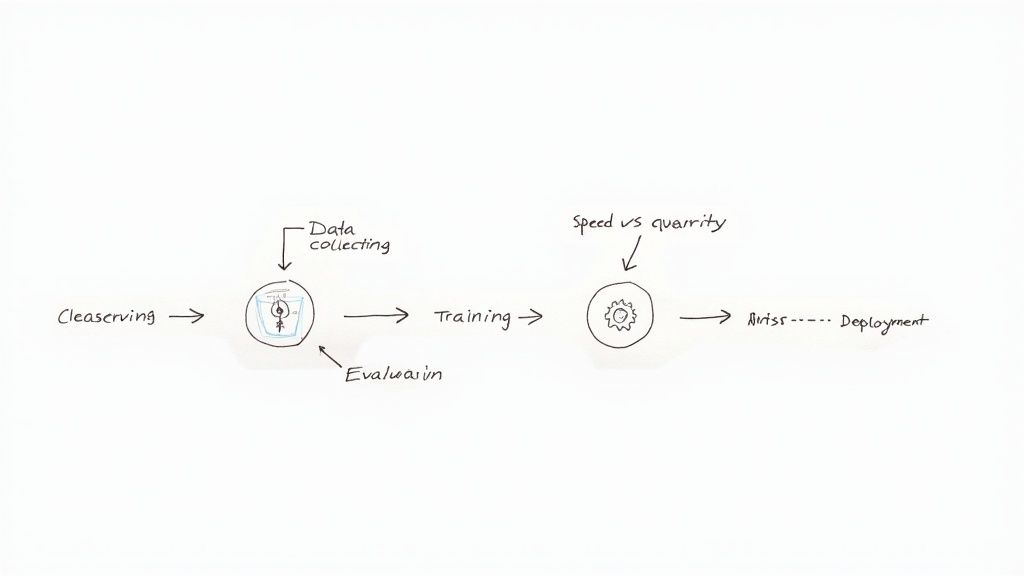

The NLP Project Lifecycle: A 5-Step Deep Dive

Moving an NLP project from idea to production follows a predictable path. Success depends on navigating the trade-offs at each stage, focusing on business value over technical perfection.

Alt text: A flowchart showing the NLP project lifecycle from data collection to deployment.

1. Data Collection & Preparation (The 80% Rule):

This is the most critical and time-consuming phase. Expect to spend up to 80% of your time here. The goal is to create a high-quality, labeled dataset.

- Action: Gather relevant text data (e.g., 1,000 support tickets), clean it (remove HTML, duplicates), and label it with the target outcome (e.g., "Billing").

- Trade-off: A small, clean dataset (1,000 examples) is often more valuable than a much larger, noisy one (100,000 examples), because label errors and duplicates teach the model the wrong patterns.
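The cleaning step described above (removing HTML and duplicates) can be sketched with the standard library alone. This is a simplified example: the function names and sample tickets are hypothetical, and the regex-based tag stripping is a rough approximation that a real pipeline might replace with a proper HTML parser.

```python
import html
import re


def clean_text(raw: str) -> str:
    """Strip HTML tags, decode entities, and collapse whitespace."""
    no_tags = re.sub(r"<[^>]+>", " ", raw)
    decoded = html.unescape(no_tags)
    return re.sub(r"\s+", " ", decoded).strip()


def deduplicate(examples: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Drop examples whose cleaned text was already seen (case-insensitive)."""
    seen = set()
    unique = []
    for text, label in examples:
        cleaned = clean_text(text)
        key = cleaned.lower()
        if cleaned and key not in seen:
            seen.add(key)
            unique.append((cleaned, label))
    return unique


raw_tickets = [
    ("<p>I was charged twice &amp; need a refund.</p>", "Billing"),
    ("I was charged twice & need a refund.", "Billing"),
    ("<div>App crashes   on login</div>", "Tech Support"),
]
dataset = deduplicate(raw_tickets)
print(dataset)
```

Deduplicating after cleaning matters here: the first two tickets only look different because of markup.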

2. Model Selection & Training:

Choose the right tool for the job. For most business cases, this means fine-tuning a pre-trained Transformer model like BERT or an open-source variant of GPT.

- Action: Feed your labeled data into the model to teach it the specific patterns of your business problem.

- Trade-off: A smaller, fine-tuned model (e.g., DistilBERT) can be faster and cheaper to run than a massive one (e.g., GPT-4), often with comparable accuracy for specific tasks.

3. Evaluation:

Measure the model's performance against business goals, not just academic metrics. The key is understanding the cost of errors.

- Action: Use metrics like precision and recall to assess performance.

- Trade-off: For fraud detection, you prioritize recall (catch every possible fraud case, even if you have some false alarms). For a product recommender, you prioritize precision (make sure recommendations are highly relevant, even if you miss a few good ones).
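To make the precision and recall trade-off concrete, here is a small worked example. The labels are made up, and `precision_recall` is a hypothetical helper written for this illustration (libraries such as scikit-learn provide equivalents).

```python
def precision_recall(y_true: list[int], y_pred: list[int]) -> tuple[float, float]:
    """Compute precision and recall for the positive class (label 1)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Made-up labels: 1 = fraud, 0 = legitimate.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
# A recall-oriented model: catches every fraud case, with two false alarms.
y_pred = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]

precision, recall = precision_recall(y_true, y_pred)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

In this toy case the model flags six transactions, four of them truly fraudulent, so recall is perfect while precision drops to about two thirds. Whether that is acceptable depends on what a false alarm costs compared with a missed case.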

4. Deployment:

Package the trained model and expose it as an API so other applications can use it.

- Action: Deploy the model in a cloud environment (AWS, GCP, Azure) and ensure it can handle production traffic.

- Trade-off: A serverless deployment might be cheaper for low-traffic applications, while dedicated instances provide lower latency for high-throughput needs.
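As a rough illustration of exposing a model as an API, the sketch below wraps a prediction function in a tiny HTTP endpoint using only the Python standard library. Everything here is an assumption for demonstration: `predict` is a placeholder rule rather than a trained model, and `ClassifyHandler` and `serve` are hypothetical names. A production deployment would more likely use a dedicated web framework or a managed cloud service.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(text: str) -> dict:
    """Placeholder for the trained model; returns a fixed-rule prediction."""
    label = "Billing" if "invoice" in text.lower() else "Tech Support"
    return {"label": label}


class ClassifyHandler(BaseHTTPRequestHandler):
    """Accepts POST requests with a JSON body like {"text": "..."}."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
            body, status = predict(str(payload["text"])), 200
        except (ValueError, KeyError, TypeError):
            body, status = {"error": "expected JSON with a 'text' field"}, 400
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port: int = 8000) -> None:
    """Start a blocking development server (not production-grade)."""
    HTTPServer(("127.0.0.1", port), ClassifyHandler).serve_forever()
```

Calling `serve()` starts the endpoint locally. Other applications would then send a POST request with a JSON body and read the predicted label from the response.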

5. Monitoring & Maintenance (MLOps):

Models degrade over time as real-world data changes ("model drift"). You need a plan to monitor performance and retrain the model periodically.

- Action: Set up alerts to track accuracy and data distributions. Plan for quarterly or semi-annual retraining cycles.

- Trade-off: Frequent retraining ensures high accuracy but increases operational cost. Find the right cadence for your use case. This process is a core part of MLOps, which you can learn more about if you plan to hire remote AI developers.
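One simple way to watch for drift is to compare the distribution of predicted labels in a recent window against a baseline. The sketch below uses total variation distance for that comparison. The prediction logs, the helper names, and the 0.2 alert threshold are assumptions for illustration, and real monitoring would typically also track accuracy on freshly labeled samples.

```python
from collections import Counter


def label_distribution(labels: list[str]) -> dict[str, float]:
    """Return the share of each label in a list of predictions."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}


def total_variation(p: dict[str, float], q: dict[str, float]) -> float:
    """Half the sum of absolute differences between two distributions (0 to 1)."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


# Made-up prediction logs: at training time vs. the most recent week.
baseline = ["Billing"] * 50 + ["Bug report"] * 30 + ["Feature request"] * 20
recent = ["Billing"] * 20 + ["Bug report"] * 60 + ["Feature request"] * 20

drift = total_variation(label_distribution(baseline), label_distribution(recent))
DRIFT_THRESHOLD = 0.2  # assumed alert level; tune per use case
needs_review = drift > DRIFT_THRESHOLD
print(f"drift={drift:.2f} needs_review={needs_review}")
```

A shift like this one, where bug reports double their share, does not prove the model is wrong, but it is a cheap signal that the incoming data has changed and a labeled spot check is worth doing.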

NLP Project Checklist for CTOs & Founders

Use this checklist to de-risk your first NLP project and ensure it's set up for success from day one.

Phase 1: Scoping & Planning (Week 1)

- Define Business Problem: Clearly state the problem in terms of business metrics (e.g., "Reduce ticket response time by 30%").

- Set Success Criteria: Define a specific, measurable pilot goal (e.g., "Achieve 85% classification accuracy").

- Confirm Data Availability: Do you have at least 500–1,000 labeled examples to start with? If not, what is the plan to get them?

- Make Build vs. Buy Decision: Use the framework above. Is an API sufficient for the pilot?

- Identify Team: Assign 1-2 engineers (NLP/Backend) to own the pilot.

Phase 2: Execution (Weeks 2-3)

- Establish Data Pipeline: Set up a script to clean and prepare the initial dataset.

- Train Initial Model: Fine-tune a pre-trained model (PyTorch or TensorFlow) on the pilot data.

- Evaluate Against Baseline: How does the model perform compared to the current manual process?

- Iterate: Analyze errors and retrain the model with corrected or additional data.

Phase 3: Pilot Review & Next Steps (Week 4)

- Review Pilot Results: Did you meet the success criteria defined in Phase 1?

- Estimate ROI: Project the cost savings or revenue impact if the solution were fully rolled out.

- Develop Production Plan: Outline the steps needed to move from pilot to a fully monitored, production-ready system.

- Go/No-Go Decision: Make a clear decision to either scale the project, pivot, or stop.
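The ROI estimate in Phase 3 can start as back-of-the-envelope arithmetic. The sketch below is one possible formula, not a standard one. Every input value is a hypothetical placeholder to be replaced with your own numbers, apart from the 60 minutes of triage per shift, which is the low end of the range in Example 1.

```python
def estimate_annual_roi(
    agents: int,
    triage_minutes_per_shift: float,
    share_automated: float,
    hourly_cost: float,
    working_days: int,
    annual_run_cost: float,
) -> dict[str, float]:
    """Estimate yearly savings from automating part of manual triage."""
    hours_saved = (
        agents * (triage_minutes_per_shift / 60) * share_automated * working_days
    )
    gross_savings = hours_saved * hourly_cost
    return {
        "hours_saved": hours_saved,
        "gross_savings": gross_savings,
        "net_savings": gross_savings - annual_run_cost,
    }


# Hypothetical inputs for illustration only.
roi = estimate_annual_roi(
    agents=10,
    triage_minutes_per_shift=60,
    share_automated=0.8,
    hourly_cost=40.0,
    working_days=250,
    annual_run_cost=30_000.0,
)
print(roi)
```

With these placeholder inputs the estimate comes to 2,000 agent hours and 50,000 in net savings per year. The point is less the number itself than making each assumption explicit before the go/no-go decision.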

What to Do Next

You now have a practical framework for understanding and implementing Natural Language Processing. The key is to move from theory to action quickly.

- Identify One High-Impact Bottleneck. Look for a repetitive, text-heavy process in your operations (support, sales, marketing) that is slowing your team down.

- Scope a 2-Week Pilot. Define a clear goal and success metric. Frame it as a low-risk experiment, not a massive engineering project. Preparing the right questions for your artificial intelligence project is key.

- Book a Scoping Call. Don't go it alone. A 20-minute call with an NLP expert can de-risk your pilot and accelerate your timeline. We connect you with senior engineers who have shipped real-world NLP systems.
