TL;DR

- Prioritize Risk: Don’t secure everything equally. Use a framework that ties security controls directly to data sensitivity (PII, PHI) and business criticality (impact of loss). Focus your budget on protecting your most valuable assets.

- Build in Layers: A single control will fail. Implement a defense-in-depth architecture using network segmentation (VPCs), strict Identity and Access Management (IAM), and encryption of data both at rest and in transit.

- Automate Everything: Manual security doesn’t scale. Automate data discovery and classification, use policy-as-code for access controls, and set up automated alerts in a SIEM for suspicious activity like dormant account access.

- Actionable Next Step: Use the checklist below to score your current data platform. If you score below 70%, your immediate priority should be a threat modeling session for your crown-jewel data assets.

Who this guide is for

This guide is for technical leaders who need to make sound decisions about securing large-scale data platforms within the next quarter.

- CTO / Head of Engineering: You need a risk-based framework to justify security investments and ensure your architecture is defensible.

- Founder / Product Lead: You're scoping AI features and need to understand the security overhead and risks of handling sensitive training data.

- Staff Engineer / Architect: You are responsible for designing and implementing the technical controls for data lakes, warehouses, and ML pipelines.

The 4-Step Framework for Securing Big Data

Securing a sprawling data environment feels overwhelming because teams often start with tools instead of a strategy. A successful program connects every security action back to a specific business risk, ensuring your resources are focused on protecting what actually matters.
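One way to make "protecting what actually matters" concrete is to score each data asset by sensitivity and business criticality, then work down the list. The sketch below is a minimal illustration, not a standard method: the 1-to-5 scales, the `SENSITIVITY` weights, the `DataAsset` class, and the asset names are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical sensitivity weights; calibrate them to your own risk register.
SENSITIVITY = {"public": 1, "internal": 2, "pii": 4, "phi": 5}


@dataclass(frozen=True)
class DataAsset:
    name: str
    classification: str  # key into SENSITIVITY
    criticality: int     # 1 (minor impact if lost) to 5 (severe impact)

    @property
    def risk_score(self) -> int:
        # Simple product of sensitivity and criticality (an assumed model).
        return SENSITIVITY[self.classification] * self.criticality


def prioritize(assets):
    """Return assets ordered highest risk first; ties are broken by name."""
    return sorted(assets, key=lambda a: (-a.risk_score, a.name))


# Made-up assets for illustration only.
assets = [
    DataAsset("marketing_site_logs", "internal", 2),
    DataAsset("customer_data_lake", "pii", 5),
    DataAsset("ml_training_set", "phi", 3),
]

ranked = prioritize(assets)
for asset in ranked:
    print(f"{asset.risk_score:>2}  {asset.name}")
```

The top of the ranked list is where threat modeling and budget should go first.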

Here is the step-by-step framework we use to build defensible data platforms:

- Assess and Classify: You can't protect what you don't know you have. Start by using automated tools to scan your data stores (S3, BigQuery, Snowflake) to discover and tag sensitive data like PII and PHI. This creates a data inventory map.

- Model Threats: Think like an attacker. For your most critical data assets (e.g., customer data lake, ML training sets), run a threat modeling exercise using the STRIDE model (Spoofing, Tampering, Repudiation, Information Disclosure, Denial of Service, Elevation of Privilege). This turns vague risks into a concrete list of vulnerabilities to fix.

- Implement Layered Controls: Build a multi-layered defense. Isolate systems using network segmentation (VPCs), enforce least-privilege with granular IAM roles, and encrypt all data at rest (KMS) and in transit (TLS 1.2+).

- Monitor and Respond: Make security an active operation. Funnel all access and API logs into a central SIEM. Set up automated alerts for high-signal events (e.g., access from an unusual location) and have a documented incident response playbook ready.

This risk-based approach moves you away from an expensive and ineffective "secure everything equally" mindset to one of smart, targeted prioritization.
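To illustrate step 1 (Assess and Classify), here is a minimal sketch of pattern-based tagging over sampled column values. It is not how any particular discovery tool works: the two regex detectors, the `classify_column` and `build_inventory` helpers, the 0.5 match threshold, and the sample data are all assumptions made for the example.

```python
import re

# Hypothetical detectors; real discovery tools use far richer rules.
PII_PATTERNS = {
    "email": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
    "us_ssn": re.compile(r"^\d{3}-\d{2}-\d{4}$"),
}


def classify_column(samples, threshold=0.5):
    """Return the tags whose pattern matches at least `threshold`
    of the non-empty sampled values."""
    values = [s.strip() for s in samples if s and s.strip()]
    if not values:
        return set()
    tags = set()
    for tag, pattern in PII_PATTERNS.items():
        hits = sum(1 for v in values if pattern.match(v))
        if hits / len(values) >= threshold:
            tags.add(tag)
    return tags


def build_inventory(table_samples):
    """Map 'table.column' to its sorted tags, keeping only tagged columns."""
    inventory = {}
    for table, columns in table_samples.items():
        for column, samples in columns.items():
            tags = classify_column(samples)
            if tags:
                inventory[f"{table}.{column}"] = sorted(tags)
    return inventory


# Made-up values standing in for rows sampled from a data store.
samples = {
    "customers": {
        "email": ["ana@example.com", "bo@example.org", ""],
        "signup_channel": ["web", "mobile", "web"],
        "tax_id": ["123-45-6789", "987-65-4321", "n/a"],
    }
}

inventory = build_inventory(samples)
print(inventory)
```

The resulting dictionary is a very small version of the data inventory map: it lists only the columns that need stronger controls.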

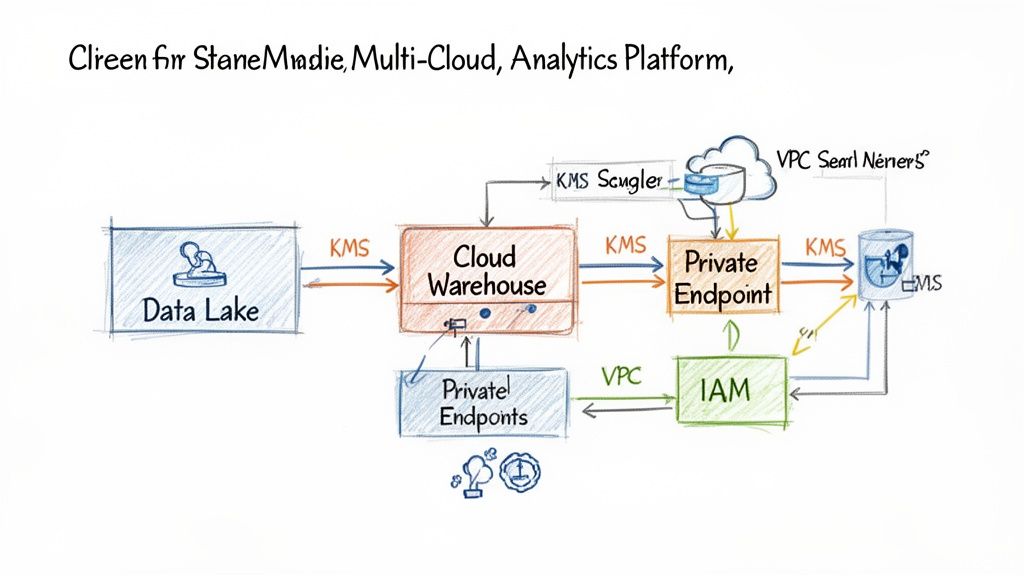

Practical Example 1: Securing a Multi-Cloud Analytics Platform

A common challenge is securing data that moves between cloud providers, such as from an application on GCP to an analytics warehouse on AWS.

Architecture:

- Ingestion: Data lands in a dedicated Google Cloud Storage bucket within an isolated VPC. A service account with write-only permissions handles this.

- Transit: An encrypted VPN tunnel or private link connects the GCP VPC to an AWS VPC. All traffic is forced through this channel.

- Processing: In AWS, a Spark job in a separate processing VPC reads the raw data, cleanses it, and writes the curated data to an Amazon S3 bucket. The Spark cluster's IAM role has read access to the raw bucket and write access to the curated bucket—nothing more.

- Analytics: Snowflake, running in its own VPC, accesses the curated data via a private endpoint. All data is encrypted at rest using a customer-managed key (CMK) in AWS KMS.

Business Impact: This segmented architecture reduces the risk of lateral movement. A compromise in the ingestion zone doesn't grant an attacker access to the production data warehouse, directly reducing the potential cost and reputational damage of a breach.
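The "read raw, write curated, nothing more" rule for the Spark role in the Processing step can be sketched as an IAM policy document plus a small sanity check. The bucket names and the `allowed_actions` helper are hypothetical. The helper only does exact string matching and ignores wildcards, conditions, and Deny statements, so it is no substitute for real policy evaluation.

```python
import json

# Hypothetical bucket ARNs; substitute your own.
RAW = "arn:aws:s3:::example-raw-bucket"
CURATED = "arn:aws:s3:::example-curated-bucket"

spark_role_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {   # Read objects from the raw bucket
            "Sid": "ReadRaw",
            "Effect": "Allow",
            "Action": ["s3:GetObject"],
            "Resource": [f"{RAW}/*"],
        },
        {   # Listing is granted on the bucket itself, not on its objects
            "Sid": "ListRaw",
            "Effect": "Allow",
            "Action": ["s3:ListBucket"],
            "Resource": [RAW],
        },
        {   # Write objects to the curated bucket
            "Sid": "WriteCurated",
            "Effect": "Allow",
            "Action": ["s3:PutObject"],
            "Resource": [f"{CURATED}/*"],
        },
    ],
}


def allowed_actions(policy, resource):
    """Collect actions explicitly allowed on an exact resource string.
    Simplified: no wildcard expansion, no Deny or Condition handling."""
    actions = set()
    for stmt in policy["Statement"]:
        if stmt["Effect"] == "Allow" and resource in stmt["Resource"]:
            actions.update(stmt["Action"])
    return actions


raw_actions = allowed_actions(spark_role_policy, f"{RAW}/*")
curated_actions = allowed_actions(spark_role_policy, f"{CURATED}/*")
print(json.dumps(spark_role_policy, indent=2))
```

Depending on the output committer, a real Spark job may need a few more permissions on the curated bucket, so treat this as a starting point and add each extra action deliberately.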

Practical Example 2: Dynamic Data Masking in Snowflake

You need to allow BI analysts to query customer data without exposing sensitive PII like email addresses. Dynamic data masking applies a policy at query time.

Configuration Snippet (SQL):

    -- Create a masking policy to redact emails for non-privileged roles
    CREATE OR REPLACE MASKING POLICY email_mask AS (val STRING)
    RETURNS STRING ->
      CASE
        WHEN CURRENT_ROLE() IN ('PII_VIEWER') THEN val
        ELSE '***-REDACTED-***'
      END;

    -- Apply the policy to the customer email column
    ALTER TABLE customers
      MODIFY COLUMN email
      SET MASKING POLICY email_mask;

Outcome: An analyst with a standard BI_USER role who runs SELECT email FROM customers will see a column of ***-REDACTED-***. A user in the PII_VIEWER role sees the actual email addresses. The underlying data is never changed.

Business Impact: This control allows wider data access for analytics while minimizing the risk of a PII leak, enabling data democratization without sacrificing compliance with regulations like the General Data Protection Regulation (GDPR).

Deep Dive: Trade-offs and Common Pitfalls

Implementing a robust security program involves navigating critical trade-offs.

- Performance vs. Security: Encrypting data in use with confidential computing provides the highest level of protection but can introduce performance overhead. For most workloads, encryption at rest and in transit offers the best balance.

- Usability vs. Control: Overly restrictive IAM policies can frustrate developers and analysts, leading them to find insecure workarounds. The key is to automate role creation and provide clear, self-service paths for requesting temporary, elevated access when needed.

- Cost of Tools vs. Risk of Breach: Advanced security tools (SIEMs, automated discovery scanners) require budget. However, IBM's annual Cost of a Data Breach Report has put the global average cost of a breach above $4 million in recent years. The ROI on security tooling should be framed around risk reduction, not just feature sets.
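To frame tooling spend around risk reduction, a common back-of-the-envelope approach combines annualized loss expectancy (ALE) with return on security investment (ROSI). The sketch below uses the $4 million breach figure mentioned above as the loss per incident. The breach probabilities and the tooling cost are invented for illustration and should be replaced with your own estimates.

```python
def ale(annual_rate_of_occurrence, single_loss_expectancy):
    """Annualized loss expectancy: expected incidents per year times cost per incident."""
    return annual_rate_of_occurrence * single_loss_expectancy


def rosi(ale_before, ale_after, annual_control_cost):
    """Return on security investment: net risk reduction divided by control cost."""
    risk_reduction = ale_before - ale_after
    return (risk_reduction - annual_control_cost) / annual_control_cost


BREACH_COST = 4_000_000        # loss per incident, from the figure cited above
before = ale(0.10, BREACH_COST)  # assumed 10% yearly breach likelihood without the tooling
after = ale(0.04, BREACH_COST)   # assumed 4% yearly breach likelihood with the tooling
tooling_cost = 120_000           # assumed yearly cost of the tooling

result = rosi(before, after, tooling_cost)
print(f"ALE before: ${before:,.0f}  ALE after: ${after:,.0f}  ROSI: {result:.0%}")
```

With these assumed inputs, the tooling removes about $240,000 of expected annual loss for $120,000 of spend, a ROSI of roughly 100%. The estimate is only as good as the probabilities you feed in, so state them explicitly when presenting the case.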

Common Pitfalls to Avoid:

- Ignoring Service Accounts: Teams often focus on user permissions and forget about the service accounts used by applications and automation. These are prime targets for attackers and must be locked down with least-privilege permissions.

- "Set and Forget" IAM: IAM is not a one-time setup. You need a process for regularly reviewing and pruning permissions, especially for users who have changed roles or left the company.

- Neglecting Logs: Not collecting or monitoring access logs is like flying blind. A lack of visibility makes it impossible to detect a breach in progress or conduct a forensic investigation after the fact.

To get this right, build on fundamental security architecture principles instead of treating each pitfall as a one-off fix.
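The service account and "set and forget" IAM pitfalls above both come down to reviewing who still uses their access. As a minimal sketch, assuming you can export a last-activity timestamp per account from your access logs, a periodic job could flag candidates for pruning. The `find_dormant` helper, the 90-day window, and the account names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def find_dormant(last_activity, now, max_idle_days=90):
    """Return sorted account names whose last activity is older than
    `max_idle_days`, or that have never been active (None)."""
    cutoff = now - timedelta(days=max_idle_days)
    return sorted(
        name
        for name, seen in last_activity.items()
        if seen is None or seen < cutoff
    )


# Fixed "now" and made-up accounts so the example is reproducible.
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
last_activity = {
    "alice": datetime(2024, 5, 20, tzinfo=timezone.utc),
    "svc-etl-legacy": datetime(2023, 11, 2, tzinfo=timezone.utc),
    "bob-former-employee": datetime(2024, 1, 15, tzinfo=timezone.utc),
    "svc-never-used": None,
}

dormant = find_dormant(last_activity, now)
print(dormant)
```

Human users and service accounts go through the same check here on purpose. Flagged accounts should be reviewed and then disabled, not deleted blindly, since some automation runs only quarterly or yearly.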

Big Data Security Checklist

Use this checklist to perform a quick health check of your current big data security posture. Score one point for each "Yes."

Scoring:

- 8–10: Excellent. You have a mature, proactive security program.

- 5–7: Solid foundation, but there are critical gaps to address, likely in automation and operational response.

- 0–4: High risk. You are missing fundamental controls and need to prioritize this work immediately.
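If you want to track the score over time, the bands above translate directly into a few lines of code. This sketch assumes a ten-item checklist answered with yes/no values. The `score_checklist` and `rating` helpers and the sample answers are hypothetical.

```python
def score_checklist(answers):
    """Count one point for each "Yes" (True) answer."""
    return sum(1 for answer in answers if answer)


def rating(score):
    """Map a 0-10 score onto the scoring bands above."""
    if not 0 <= score <= 10:
        raise ValueError("score must be between 0 and 10")
    if score >= 8:
        return "Excellent"
    if score >= 5:
        return "Solid foundation"
    return "High risk"


# Placeholder answers for ten checklist items (True means "Yes").
answers = [True, True, False, True, True, False, True, False, True, False]

score = score_checklist(answers)
band = rating(score)
print(f"{score}/10: {band}")
```

Recording the score each quarter gives you a simple trend line to show whether the gaps are closing.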

What to do next

- Run the Checklist: Complete the self-assessment checklist above with your team this week to identify your biggest gaps.

- Schedule Threat Modeling: If you scored below 7, book a 2-hour threat modeling session focused on your single most valuable data asset.

- Build a 90-Day Roadmap: Use the output of the session to create a prioritized backlog of security tasks. Focus on IAM and encryption first.

Building a team with the unique blend of cloud, data, and security skills is one of the biggest challenges in this space. At ThirstySprout, we specialize in connecting you with elite, pre-vetted AI, data, and security engineers who can implement these controls from day one. Start a pilot with an expert today.
