TL;DR

- Adopt Zero Trust: Never trust, always verify. Use micro-segmentation, granular access controls, and continuous verification. Stop treating your network like a castle with a moat.

- Prioritize with Threat Modeling: Use a framework like STRIDE to map threats (data poisoning, tampering, disclosure) to each stage of your data lifecycle—ingestion, processing, storage, and access.

- Encrypt Everything, Everywhere: Encrypt data both in transit (TLS) and at rest (using a KMS like AWS KMS or Google Cloud KMS). Automate key rotation every 90–365 days.

- Monitor Continuously: Centralize all logs (CloudTrail, S3 access, application logs) into a SIEM. Create high-fidelity alerts for specific threats like unusual data access or large data egress.

Who this is for

- CTO / Head of Engineering: Needs to design a secure big data architecture and make build-vs-buy decisions for security tooling.

- Founder / Product Lead: Needs to understand the business risks and costs associated with big data security to scope budgets and timelines.

- Data/MLOps Engineer: Needs practical steps for implementing encryption, access control, and monitoring in a production environment.

A 3-Step Framework to Secure Your Big Data Platform

Securing a big data stack isn't about bolting on a firewall at the end. It must be woven into your architecture from day one, grounded in a Zero Trust mindset. A single misconfiguration can expose petabytes of data, and with the average cost of a data breach climbing, a robust security posture is a core business requirement, not just an IT task.

This framework provides a repeatable process for protecting your most valuable data assets.

Step 1: Map Your Threats. You can't protect against threats you haven't identified. Start by building a practical threat model for your data pipelines.

Step 2: Design a Secure Architecture. Implement Zero Trust principles. Focus on layered defenses, strong encryption, and granular access control.

Step 3: Monitor and Respond. Continuously monitor for anomalies. Have a clear, rehearsed plan to contain and eradicate threats when they occur.
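One way to keep the Step 1 threat model practical is to store it as a small, reviewable data structure next to the pipeline code. The sketch below is illustrative only: the `Threat` record, the `unmodeled_stages` helper, and the sample entries are hypothetical names, and the stages are the four lifecycle stages named in the TL;DR.

```python
from dataclasses import dataclass
from enum import Enum


class Stride(Enum):
    SPOOFING = "Spoofing"
    TAMPERING = "Tampering"
    REPUDIATION = "Repudiation"
    INFORMATION_DISCLOSURE = "Information Disclosure"
    DENIAL_OF_SERVICE = "Denial of Service"
    ELEVATION_OF_PRIVILEGE = "Elevation of Privilege"


# Data lifecycle stages used in this article.
STAGES = ("ingestion", "processing", "storage", "access")


@dataclass(frozen=True)
class Threat:
    stage: str          # one of STAGES
    category: Stride    # STRIDE category
    description: str
    mitigation: str


def unmodeled_stages(threats):
    """Return lifecycle stages that have no threat recorded yet."""
    covered = {t.stage for t in threats}
    return [stage for stage in STAGES if stage not in covered]


# Hypothetical entries, for illustration only.
threat_model = [
    Threat("ingestion", Stride.SPOOFING,
           "Stolen service account credential", "Short-lived credentials"),
    Threat("processing", Stride.TAMPERING,
           "Transformation code modified", "Code review before deployment"),
    Threat("storage", Stride.INFORMATION_DISCLOSURE,
           "Bucket exposed publicly", "Block public access; encrypt at rest"),
]

print(unmodeled_stages(threat_model))  # prints ['access']
```

A check like `unmodeled_stages` can run in CI so that a stage with no recorded threats shows up as a review item rather than a silent gap.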

Practical Examples of Big Data Security

Theory is good, but execution is better. Here are two worked scenarios that apply this framework to common big data setups.

Example 1: Securing a Batch ETL Pipeline for Financial Data

A fintech company runs a nightly job that pulls customer transaction data from a production database, processes it with Spark, and loads it into a cloud data warehouse for analytics. A STRIDE threat model for this pipeline surfaces three threats:

- Spoofing: An attacker steals a service account credential and connects to the production database, impersonating the ETL job.

- Tampering: A malicious actor with access to the Spark cluster modifies the transformation code to skim fractions of a cent from each transaction.

- Information Disclosure: A developer accidentally configures the S3 bucket holding raw data with a public-read policy, exposing all customer PII.

The corresponding mitigations:

- Access Control: Use short-lived, temporary credentials for the ETL service account. Implement strict IAM policies allowing access only from the specific ETL compute environment.

- Encryption: Encrypt the raw data in S3 using a customer-managed key in AWS KMS. Ensure TLS is enforced for all data in transit between the database, Spark, and the data warehouse.

- Monitoring: Create a high-fidelity alert in your SIEM that triggers if the ETL service account is used outside its expected geographic region or time window (e.g., 2-4 AM).
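The encryption mitigation above can be partly enforced at the bucket itself. The following is a minimal sketch, not a policy from the original scenario: it builds an S3 bucket policy that denies any request made without TLS and any upload that does not request SSE-KMS. The bucket name is hypothetical; `aws:SecureTransport` and `s3:x-amz-server-side-encryption` are standard AWS condition keys.

```python
import json

BUCKET = "example-raw-transactions"  # hypothetical bucket name


def build_bucket_policy(bucket):
    """Build a deny-by-condition bucket policy as a plain dict."""
    arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Reject any request that does not use TLS.
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [arn, f"{arn}/*"],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            },
            {
                # Reject uploads that do not explicitly ask for SSE-KMS.
                "Sid": "DenyUploadsWithoutKms",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": f"{arn}/*",
                "Condition": {
                    "StringNotEquals": {
                        "s3:x-amz-server-side-encryption": "aws:kms"
                    }
                },
            },
        ],
    }


print(json.dumps(build_bucket_policy(BUCKET), indent=2))
```

Note that the second statement also rejects uploads that rely on bucket default encryption without sending the encryption header, so test it against your ETL writer before enforcing it. It complements, rather than replaces, blocking public access on the bucket.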

alt text: Diagram showing three data security steps: Granular Access, Data Encryption, and Continuous Monitoring, illustrating a cyclical security process.

Example 2: Securing a Real-Time IoT Sensor Platform

A manufacturing company ingests live data from thousands of factory-floor IoT devices to detect production anomalies. A STRIDE threat model for this platform surfaces three threats:

- Denial of Service (DoS): An attacker floods the ingestion endpoint (e.g., Kafka or Kinesis) with junk data, overwhelming the system and blocking legitimate sensor data.

- Information Disclosure: IoT devices transmit data over the local network without TLS encryption, allowing an attacker on the same network to intercept and read sensitive operational data.

- Elevation of Privilege: A vulnerability in the stream processing application allows an attacker to execute arbitrary code, giving them control of the processing cluster to access other data streams.

The corresponding mitigations:

- Ingestion Security: Use client certificate authentication for each IoT device connecting to the ingestion endpoint. Implement rate limiting to prevent DoS attacks.

- Encryption: Mandate TLS 1.2+ for all data in transit from devices to the cloud. Use a separate encryption key for each data stream to limit the blast radius of a key compromise.

- Code Security: Integrate static application security testing (SAST) into the CI/CD pipeline for the stream processing application to catch vulnerabilities before they are deployed.
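To make the ingestion and encryption mitigations concrete, here is a small sketch using only the Python standard library. It assumes a custom ingestion service; managed endpoints such as Kafka or Kinesis have their own settings for client authentication and quotas. The function and class names, file-path parameters, and rate values are hypothetical.

```python
import ssl
import time


def build_ingest_tls_context(ca_file=None, cert_file=None, key_file=None):
    """Server-side TLS context requiring TLS 1.2+ and a client certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.verify_mode = ssl.CERT_REQUIRED  # devices without a valid cert are rejected
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)  # CA that signs device certs
    if cert_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx


class TokenBucket:
    """Classic token bucket: refills at a fixed rate up to a maximum burst."""

    def __init__(self, rate_per_sec, capacity, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self, cost=1.0):
        now = self.clock()
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class DeviceRateLimiter:
    """One bucket per device, so a noisy device cannot starve the others."""

    def __init__(self, rate_per_sec, capacity, clock=time.monotonic):
        self._args = (rate_per_sec, capacity, clock)
        self._buckets = {}

    def allow(self, device_id):
        if device_id not in self._buckets:
            self._buckets[device_id] = TokenBucket(*self._args)
        return self._buckets[device_id].allow()
```

Keying the limiter on the device identity taken from the client certificate, rather than on the source IP, ties rate limiting to the same identity used for authentication.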

Deep Dive: Trade-Offs, Pitfalls, and Best Practices

Building a secure platform involves making critical decisions with long-term consequences. Here’s a deeper look at the trade-offs you'll face.

Designing a Secure Big Data Architecture

"Bolting security on later" is a recipe for failure. It's more expensive, less effective, and creates a management nightmare. The goal is to shrink your attack surface from the start by being deliberate about data flows, storage, and access.

alt text: Hand-drawn sketch of a data processing system with a central element and interconnected external nodes, emphasizing data flow security.

Adopting a Zero Trust Mindset

The old castle-and-moat security model is obsolete. Your data is distributed, and your users are remote. A Zero Trust architecture is now a necessity.

The core principle is simple: never trust, always verify. Every request to access data must be authenticated and authorized, regardless of its origin. This translates into concrete actions:

- Micro-segmentation: Isolate data processing clusters and storage zones. A breach in one segment shouldn't grant access to the entire environment.

- Granular Access Control: Base access decisions on user identity, device health, location, and the specific data requested.

- Continuous Verification: Don't rely on a single login event. Continuously re-verify credentials and permissions throughout a user's session.

A Zero Trust model shifts your focus from protecting the network to protecting the data itself. It assumes a breach is a matter of when, not if, and builds defenses to contain the blast radius. You can learn more about Zero Trust security principles.
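As a rough illustration of continuous verification, the sketch below re-checks a session on every request instead of trusting the original login. The `Session` fields and the `verify_request` function are hypothetical; in a real system the device health flag would come from a device-posture service and the segment from your network or data-zone design.

```python
import time
from dataclasses import dataclass


@dataclass
class Session:
    user: str
    segment: str          # data/network segment this session was issued for
    expires_at: float     # epoch seconds; a short TTL forces re-authentication
    device_healthy: bool  # assumed to be refreshed by a device-posture check


def verify_request(session, requested_segment, now=None):
    """Return (allowed, reason) for a single request."""
    now = time.time() if now is None else now
    if now >= session.expires_at:
        return False, "session expired"
    if not session.device_healthy:
        return False, "device failed health check"
    if requested_segment != session.segment:
        return False, "segment not allowed"
    return True, "ok"


session = Session("etl-analyst", "analytics", expires_at=1_000.0, device_healthy=True)
print(verify_request(session, "analytics", now=500.0))   # (True, 'ok')
print(verify_request(session, "finance", now=500.0))     # (False, 'segment not allowed')
print(verify_request(session, "analytics", now=2_000.0)) # (False, 'session expired')
```

The segment check is a simple stand-in for micro-segmentation: a session issued for one zone is refused elsewhere, which is what limits the blast radius of a stolen credential.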

Pitfall: Choosing the Wrong Access Control Model

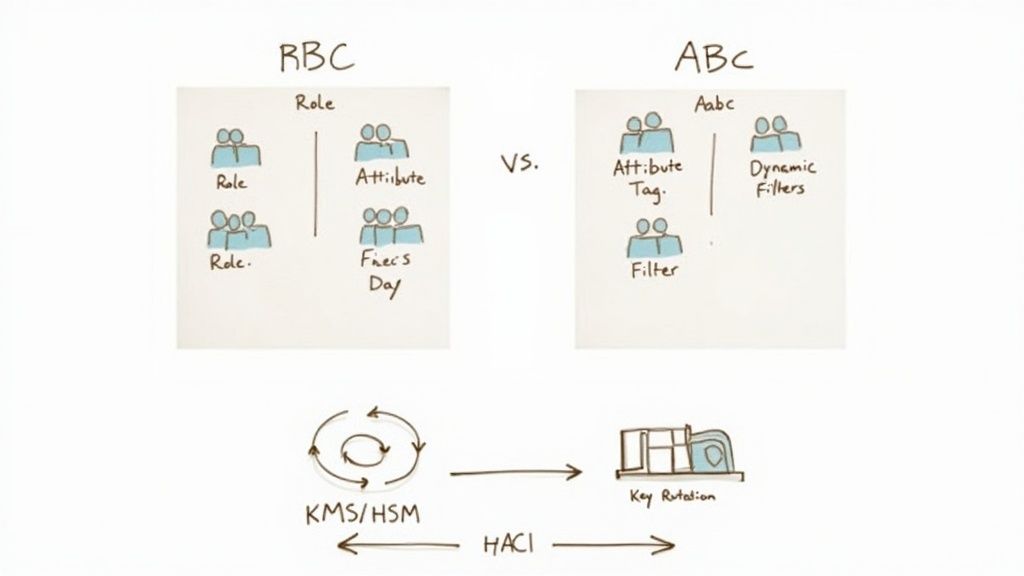

Controlling who can access data is as important as protecting what they see. The two main models are Role-Based Access Control (RBAC) and Attribute-Based Access Control (ABAC). Your choice depends on your environment's complexity.

Role-Based Access Control (RBAC) is simple and predictable. You define roles like "DataAnalyst" and assign permissions. This works well for smaller teams with clear access patterns.

Attribute-Based Access Control (ABAC) is dynamic and granular. Access is granted by evaluating attributes of the user (e.g., department), resource (e.g., data classification), and environment (e.g., time of day). ABAC is the foundation for a true Zero Trust model at scale.
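The difference between the two models is easiest to see side by side. In this sketch, RBAC is a static lookup from role to permissions, while ABAC evaluates attributes of the user, resource, and environment at request time. The role names other than "DataAnalyst", the permission strings, and the policy rule are hypothetical.

```python
from dataclasses import dataclass

# RBAC: a fixed table of role -> permissions.
ROLE_PERMISSIONS = {
    "DataAnalyst": {"read:sales_dataset"},
    "DataEngineer": {"read:sales_dataset", "write:sales_dataset"},
}


def rbac_allows(role, permission):
    """Allow only if the role's static permission set contains the permission."""
    return permission in ROLE_PERMISSIONS.get(role, set())


@dataclass(frozen=True)
class AccessRequest:
    department: str           # user attribute
    data_classification: str  # resource attribute
    hour_of_day: int          # environment attribute, 0-23


def abac_allows(req):
    """Hypothetical rule: restricted data is readable only by the finance
    department during business hours; other data is readable by anyone."""
    if req.data_classification == "restricted":
        return req.department == "finance" and 8 <= req.hour_of_day < 18
    return True


print(rbac_allows("DataAnalyst", "write:sales_dataset"))    # False
print(abac_allows(AccessRequest("finance", "restricted", 10)))  # True
print(abac_allows(AccessRequest("finance", "restricted", 3)))   # False
```

With RBAC, each new combination of team, dataset, and condition tends to need another role. With ABAC, it is usually one more attribute in the rule.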

RBAC vs. ABAC Decision Rubric

RBAC is a good start, but as your data ecosystem grows, a shift toward ABAC becomes almost inevitable to maintain security without drowning in role management.

alt text: Diagram comparing Role-Based (RBAC) and Attribute-Based (ABAC) access control models, with supporting technologies like KMS/HSM and key rotation shown.

Best Practice: Centralize Logs for Continuous Monitoring

You must assume a breach will happen. Your goal is to shrink the "dwell time"—the gap between intrusion and detection. To do this, you need to centralize logs from your entire stack into a Security Information and Event Management (SIEM) system like Splunk, Datadog, or Microsoft Sentinel.

Funnel logs from these critical sources:

- Cloud Provider APIs: AWS CloudTrail, Google Cloud Audit Logs.

- Data Access: S3 access logs, BigQuery audit logs.

- Applications: Logs from data processing engines like Apache Spark.

With centralized logs, you can establish a baseline for normal activity and create high-fidelity alerts that minimize false positives. A good alert is context-aware: "A user from marketing, who has never accessed the finance data warehouse, is attempting to download 10 GB of data at 3 AM." This ties a technical event directly to business risk, making the response priority clear. Running these automated alerting systems takes discipline, and many MLOps best practices apply here.
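A context-aware alert like the one quoted above usually combines several weak signals rather than firing on any single one. The sketch below assumes you already have a per-user baseline of previously accessed resources; the event fields, the 5 GiB egress threshold, and the quiet-hours window are hypothetical values to tune for your environment. In practice this logic would live in your SIEM's rule language rather than in application code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessEvent:
    user: str
    department: str
    resource: str
    bytes_out: int
    hour: int  # 0-23, local time


def alert_reasons(event, baseline,
                  egress_limit_bytes=5 * 1024**3, quiet_hours=range(0, 6)):
    """Collect the independent signals that make this event suspicious."""
    reasons = []
    if event.resource not in baseline.get(event.user, set()):
        reasons.append("first access to this resource")
    if event.bytes_out > egress_limit_bytes:
        reasons.append("large data egress")
    if event.hour in quiet_hours:
        reasons.append("outside normal hours")
    return reasons


def should_alert(event, baseline, min_signals=2):
    """Fire only when several signals coincide, to keep false positives low."""
    return len(alert_reasons(event, baseline)) >= min_signals


# Baseline: resources each user has accessed before (hypothetical data).
baseline = {"maria": {"marketing_dw"}}
event = AccessEvent("maria", "marketing", "finance_dw", 10 * 1024**3, 3)
print(alert_reasons(event, baseline))
print(should_alert(event, baseline))  # True
```

Returning the reasons along with the decision gives the responder the business context directly in the alert.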

Finally, remember that the data lifecycle ends with secure disposal. Follow established guidance such as NIST SP 800-88 (Guidelines for Media Sanitization) to ensure retired data is unrecoverable.

Big Data Security Checklist

Use this checklist to self-assess your security posture and build a practical roadmap for improvement.

Threat Modeling & Risk Assessment

- Have we identified and inventoried our most critical data assets (e.g., PII, financial records)?

- Do we have a documented threat model for our primary data pipelines?

- Is the threat model reviewed and updated whenever new data sources or tools are added to our stack?

Architecture & Design

- Does our architecture enforce Zero Trust principles (e.g., micro-segmentation)?

- Is all data in transit between services encrypted with strong TLS?

- Is sensitive data in non-production environments properly masked or tokenized?

Encryption & Key Management

- Is 100% of our data encrypted at rest in our data lake and data warehouse?

- Do we use a centralized Key Management System (AWS KMS, Google Cloud KMS)?

- Have we automated key rotation on a fixed schedule (e.g., every 365 days)?

Access Control & Identity

- Do we enforce the principle of least privilege for all user and service accounts via tools like AWS IAM?

- Do we have a quarterly review process to remove unused permissions and accounts?

- Is multi-factor authentication (MFA) mandatory for all administrative access?

Monitoring & Incident Response

- Are all critical logs (e.g., CloudTrail, data access, application) centralized in a SIEM?

- Have we configured high-fidelity alerts for high-risk activities (e.g., large data egress, risky IAM changes)?

- Do we have a documented and rehearsed incident response plan?

What to Do Next

- Run a Data Discovery Audit. Use an automated tool to scan your primary data stores (e.g., S3, BigQuery) to find and tag sensitive data. You can't protect what you don't know you have.

- Audit Your Top 5 IAM Roles. Identify the five most powerful IAM roles in your cloud environment. Scrutinize their permissions and remove any that are not strictly necessary. This single action can dramatically reduce your blast radius.

- Validate Your Log Pipeline. Verify that critical logs from your key data sources are flowing correctly into your SIEM. A gap in logging is a critical blind spot during an incident.
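For the IAM audit, a quick first pass is to flag Allow statements that combine wildcard actions with a wildcard resource. The sketch below works on policy documents you have already exported as JSON and loaded into dicts; the `risky_statements` helper and the sample policy are hypothetical, and a real audit should also look at usage data, not just policy text.

```python
def _as_list(value):
    """IAM allows a single value or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def risky_statements(policy):
    """Return the Sids of Allow statements with wildcard action and resource."""
    findings = []
    for stmt in _as_list(policy.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        actions = _as_list(stmt.get("Action", []))
        resources = _as_list(stmt.get("Resource", []))
        wildcard_action = any(a == "*" or a.endswith(":*") for a in actions)
        wildcard_resource = "*" in resources
        if wildcard_action and wildcard_resource:
            findings.append(stmt.get("Sid", "<no Sid>"))
    return findings


# Hypothetical policy for illustration.
sample_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Sid": "FullS3", "Effect": "Allow", "Action": "s3:*", "Resource": "*"},
        {"Sid": "ReadOneBucket", "Effect": "Allow",
         "Action": ["s3:GetObject"],
         "Resource": "arn:aws:s3:::example-bucket/*"},
    ],
}

print(risky_statements(sample_policy))  # prints ['FullS3']
```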

Building and maintaining a secure data platform requires specialized talent. The process of information security recruitment can be a significant roadblock. Whether you need a Data Security Engineer for a 6-month project or a full-time Cloud Security Architect, ThirstySprout connects you with vetted experts who can start delivering value in weeks, not months.

References

- Apache Kafka - Distributed streaming platform.

- Apache Spark - Unified analytics engine for large-scale data processing.

- Big Data Security Market Research - Market growth projections and analysis.

- NIST SP 800-88 Data Sanitization Standards - Guidelines for secure data disposal.

- ThirstySprout: Interview Questions for AI Roles - Find engineers with a security-first mindset.

