TL;DR

- Choose the Right Leadership Model: Use a "Player-Coach" for small teams (2–7 engineers) and a "Servant Leader" for larger, mature teams (8+ engineers) to avoid bottlenecks and guidance gaps.

- Structure for Impact: Organize teams into small, cross-functional "pods" for specific features (like an AI startup) or dedicated "platform teams" (like MLOps) to support multiple squads at scale.

- Measure What Matters (DORA): Track Deployment Frequency, Lead Time for Changes, Mean Time to Recovery (MTTR), and Change Failure Rate. These metrics connect engineering performance directly to business speed and stability.

- Manage Technical Debt Proactively: Allocate a fixed budget (e.g., 20% of each sprint) to address technical debt. Frame this work in terms of business impact, like "reducing future outage risk by 50%."

Who This Guide Is For

This guide is for technical leaders who must deliver results in weeks, not months.

- CTO / Head of Engineering: You need to structure your teams, manage technical debt, and connect engineering output to business goals.

- Founder / Product Lead: You are scoping roles, setting budgets for new features (especially AI), and need a predictable delivery engine.

- Talent Ops / Procurement: You're evaluating vendors and need to understand the building blocks of a high-performing remote AI team.

If you're trying to ship faster, improve quality, and make data-driven management decisions, this is your playbook.

A Modern Framework for Software Management

Effective management in software development isn't about top-down control. It's about building an ecosystem where smart people ship valuable software, fast. An effective framework balances strategic alignment with the autonomy engineers need to solve problems.

This means moving beyond simply "doing Agile" to defining how you lead, deliver, and maintain systems. It starts with choosing the right leadership model for your team's current stage.

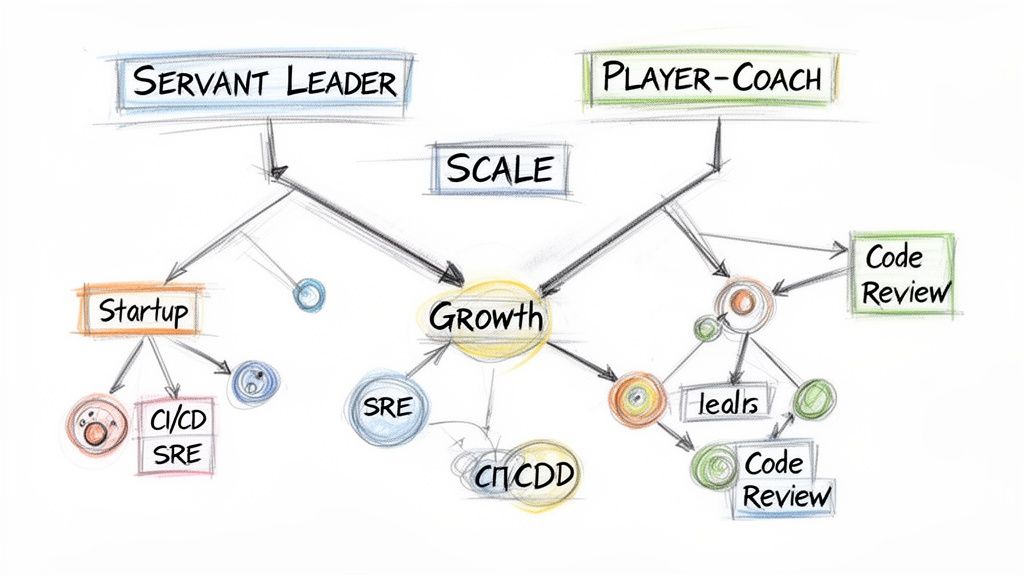

This diagram shows how leadership styles must adapt as a team scales, moving from hands-on coaching to strategic support.

Step 1: Choose Your Leadership Model

The wrong leadership style creates immediate friction. A servant leader on a junior team creates a guidance vacuum, while a player-coach on a large team becomes a bottleneck.

- The Player-Coach: This leader is still in the code, contributing to architecture, code reviews, and mentoring. This model is essential for small teams (2–7 engineers) where deep technical expertise is needed to establish sound practices.

- The Servant Leader: This manager's job is to remove blockers, secure resources, and protect the team from distractions. This is ideal for mature teams (8+ engineers) where individuals are self-sufficient and the primary challenge is large-scale coordination.

Step 2: Implement Disciplined Delivery Processes

With the right leader in place, focus shifts to the mechanics of shipping software. For modern teams, especially those working with AI, two practices are non-negotiable.

- Continuous Integration/Continuous Deployment (CI/CD): This automates your build, test, and deployment pipeline. For AI/ML, a robust CI/CD process validates changes to application code, models, and data, drastically reducing manual errors.

- Site Reliability Engineering (SRE) Practices: SRE applies an engineering mindset to system operations. It starts with defining Service Level Objectives (SLOs)—clear targets for system performance (e.g., API response time <200ms). SLOs create error budgets, giving teams the autonomy to balance new features with stability work.

This combination creates a predictable, scalable engine for building software. For a deeper dive, see our guide on project management for software engineering.

Practical Examples: Structuring Your Engineering Teams

Let's move from theory to practical blueprints. Here are two real-world team structures you can adapt for your company: a lean startup pod and a scale-up platform team.

Example 1: The Lean AI Startup Pod

Scenario: You're a Seed-stage startup building a Retrieval-Augmented Generation (RAG) feature. You need to move fast and stay focused. A small, cross-functional "pod" is the answer.

Team Composition:

- 1 Senior AI Engineer: Owns the core tech, from vector database selection to prompt engineering.

- 1 Full-Stack Engineer: Builds the UI and APIs connecting the front-end to the RAG service.

- 1 Fractional AI Product Manager (PM): Defines scope and prioritizes work to ensure you're building something customers will pay for.

This tight structure keeps communication simple and focuses the entire team on a single business goal. You can learn more about setting up these units in our guide on cross-functional team building.

Interview Question for the Senior AI Engineer Role:

"Walk me through how you would design and deploy a proof-of-concept RAG system for a knowledge base with 50,000 documents. What's your proposed stack, how would you chunk the documents, and what three metrics would you track to measure its quality in the first 30 days?"

Example 2: The Scale-Up MLOps Platform Team

Scenario: Your company is at Series C with multiple product squads deploying ML models. To avoid chaos and duplicated work, you create a centralized MLOps platform team. Their job is to build the "paved road"—the infrastructure, tools, and processes—that lets other teams ship ML models quickly and safely.

Team's Mandate (defined by SLOs):

The MLOps team's success is measured by the reliability and efficiency of their platform. They make a pact with the product squads using Service Level Objectives.

If an SLO is breached, all new platform feature development stops. The team's entire focus shifts to reliability work until the objective is met. This enforces a culture of stability.

A well-run platform team is a force multiplier. It frees up your product squads to focus on building user-facing features, not fighting with Kubernetes configurations. Building such teams requires strong leadership, often developed through programs like Executive Coaching and Leadership Training to Build High Performing Teams.

Deep Dive: Managing Technical Debt and Measuring What Matters

Technical debt is the invisible drag on your team's velocity. Effective management in software development requires a system to find, categorize, and strategically pay it down. At the same time, you must measure outcomes, not activity (like lines of code).

Taming Technical Debt

Not all debt is equal. The first step is to categorize it.

- Code Debt: Messy code, poor test coverage, complex modules.

- Architectural Debt: An outdated monolith preventing independent deployments.

- Infrastructure Debt: Unpatched servers, a slow CI/CD pipeline, poor monitoring.

- Knowledge Debt: Critical system knowledge lives only in one person's head.

Once categorized, use a simple scorecard to prioritize fixes. This turns a subjective debate into an objective conversation. For more, see our guide on how to reduce technical debt.

This structure allows each pod to own its DORA metrics and manage its technical debt, aligning engineering efforts with product goals.

Shifting to Outcome-Based Metrics (DORA)

The gold standard for measuring engineering performance comes from the DevOps Research and Assessment (DORA) group. These four metrics provide a balanced view of your team's throughput and stability.

- Deployment Frequency: How often do you release to production? (Elite teams: on-demand, multiple times a day)

- Lead Time for Changes: How long from commit to production? (Elite teams: <1 day)

- Mean Time to Recovery (MTTR): How long to restore service after an incident? (Elite teams: <1 hour)

- Change Failure Rate: What percentage of deployments cause a failure? (Elite teams: <15%)

Tracking these metrics together prevents teams from chasing speed at the expense of quality. For more on this topic, explore a comprehensive playbook for managing technical debt.

Checklist: Your Actionable Management Improvement Plan

Use this checklist to run a health check on your engineering organization. The goal is to start honest conversations that lead to tangible improvements.

People & Team Health

- Role Clarity: Does every engineer have a written description of their role and success metrics?

- Onboarding: Is your process for remote AI talent documented and designed to make new hires productive within 90 days?

- Feedback Loops: Are 1-on-1s (weekly/bi-weekly) and performance reviews (quarterly/semi-annually) consistently held?

- Career Growth: Can every engineer articulate their career path for the next 12–18 months?

Process & Delivery Pipeline

- Agile Ceremonies: Do sprint planning, stand-ups, and retrospectives produce actionable outcomes?

- Release Pipeline: Is your CI/CD pipeline fully automated from commit to production?

- Code Review Quality: Is there a documented standard for code reviews, with feedback delivered in <24 hours?

- Incident Response: Do you have a documented process for handling incidents, including a blameless post-mortem?

Technology & Developer Experience

- Local Setup: Can a new engineer get their local dev environment running in <1 day?

- Toolchain Evaluation: Have you recently reviewed your core dev tools (IDE, source control, etc.) to ensure they still fit your needs?

- Documentation Access: Is technical documentation centralized, searchable, and up-to-date?

- Test Environments: Can developers spin up isolated test environments on demand?

Metrics & Business Impact

- DORA Metrics: Are you tracking all four key metrics (Deployment Frequency, Lead Time, MTTR, Change Failure Rate)?

- Technical Debt: Do you have a system for tracking and a process for paying down debt in each sprint (e.g., 20% rule)?

- Business Alignment: Can every engineer connect their current work to a company-level OKR?

What to Do Next

Knowing is not doing. The secret to making real change is to start small and build momentum.

- Run a 30-Minute Self-Audit: Use the checklist above to get an honest assessment of where you stand right now.

- Identify Your #1 Bottleneck: What single problem is causing the most friction? A slow hiring process for AI specialists? Unreliable deployments? Find the biggest pain point.

- Book a No-Strings-Attached Scoping Call: Schedule a 20-minute call with us. We'll help you map out the problem and define a solution. We specialize in connecting companies with vetted, senior AI talent to tackle your biggest challenges. You can launch a pilot project in just 2–4 weeks.

Frequently Asked Questions

Here are no-nonsense answers to common questions about management in software development.

How do you manage performance for a remote AI team?

Stop tracking "time at keyboard" and focus on outcomes. Use OKRs for quarterly goals and well-scoped user stories for sprints. Measure team-level DORA metrics and concrete individual wins (e.g., "shipped feature X," "cut model inference latency by 15%"). Use consistent 1-on-1s and strong asynchronous communication habits to build trust and accountability.

When should a startup hire a dedicated engineering manager?

When the founder or CTO spends over 30% of their week on people management (1-on-1s, hiring, reviews). This usually happens when the team grows beyond 5–8 engineers. Your first hire should be a "player-coach"—someone still technically credible who can mentor the team but whose primary job is managing people and processes.

How do you balance new features with technical debt?

Make paying down debt a non-negotiable part of your process. A battle-tested approach is the 20% rule: allocate about one day a week, or 20% of every sprint, to refactoring, updating dependencies, or improving tests. Frame this work in business terms: "Investing 20% now to upgrade this library will let us build future payment features 30% faster and prevent a likely outage next quarter."

Ready to fix the real bottleneck in your development process? ThirstySprout helps you hire the vetted, senior AI and software engineering talent you need to accelerate your roadmap.

Start a Pilot

Hire from the Top 1% Talent Network

Ready to accelerate your hiring or scale your company with our top-tier technical talent? Let's chat.