TL;DR: What is Prompt Engineering?

- Prompt engineering is the process of designing, refining, and testing instructions (prompts) to get reliable, accurate, and safe outputs from Large Language Models (LLMs). It's the technical discipline of communicating with AI.

- Key components of a prompt include: the core instruction, context, examples (few-shot), a defined persona, output format (like JSON), and constraints (rules or guardrails).

- Essential techniques include: Zero-Shot (direct command), Few-Shot (learning by example), and Chain-of-Thought (showing step-by-step reasoning) to improve accuracy on complex tasks.

- For business impact: Well-engineered prompts reduce AI errors ("hallucinations"), improve user experience with consistent outputs, and cut development costs by making iteration faster than model fine-tuning.

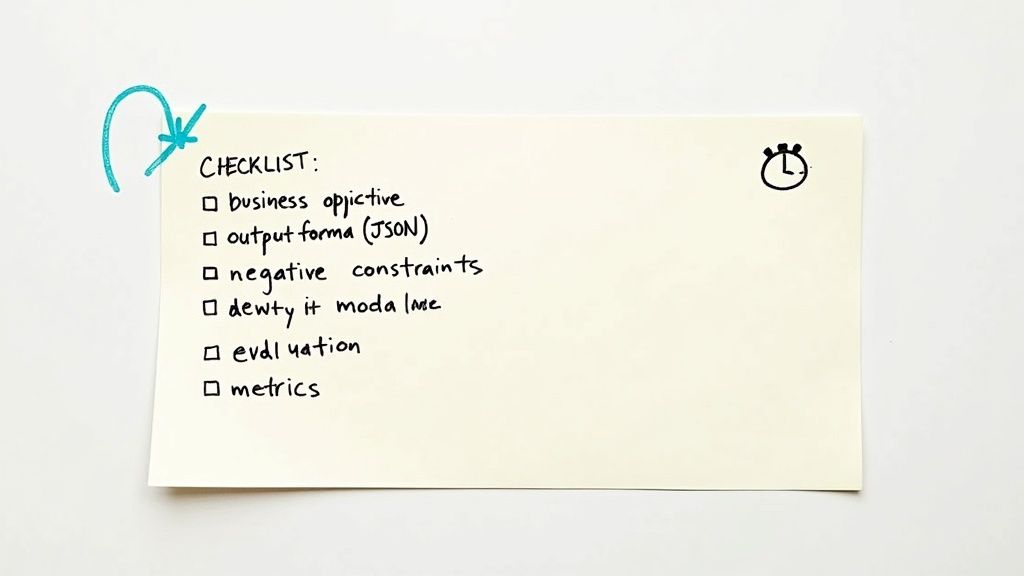

- Actionable next step: Use a structured checklist to ensure your prompts are built for production. Define the business objective, specify the output format, add examples, and establish clear metrics for success.

Who This Guide Is For

This guide is for technical leaders and product managers who need to build reliable AI features and assemble the right team to do it.

- CTO / Head of Engineering: You're responsible for the technical strategy and need to decide when to prompt, when to fine-tune, and how to build a team that can deliver production-grade AI.

- Founder / Product Lead: You're scoping AI-powered features and need to understand the practical effort required to move from a cool demo to a reliable product that delivers business value.

- Hiring Manager / Talent Ops: You're tasked with finding and vetting AI talent and need to distinguish between hobbyists and professional engineers who can build robust systems.

The Engineering Discipline Behind AI Conversations

Prompt engineering is the process of crafting the right instructions to guide Large Language Models (LLMs) toward accurate and reliable outputs. Think of an LLM as a genius intern who has read the entire internet but has zero context about your business. Your prompt is the creative brief that turns their raw potential into a focused tool.

Without well-engineered prompts, an LLM gives vague, irrelevant, or incorrect answers. For technical leaders, this isn't just a technical issue—it's a product problem that directly impacts user experience, brand reputation, and your bottom line.

Alt text: Hand-drawn diagram illustrating a tuning process with gear icons, leading to improved speed, accuracy, and safety, with reliable output.

A well-crafted prompt is the difference between a frustrating gimmick and a genuinely useful AI feature. The business impact is immediate:

- Fewer AI errors: Precise prompts reduce "hallucinations"—when the model confidently states something untrue. This is critical for applications where accuracy matters.

- Improved user experience: Consistent, high-quality responses build trust and user reliance on your AI features.

- Faster, cheaper development: Iterating on a prompt is almost always more cost-effective than retraining or fine-tuning an entire model. You ship better features, faster.

The Core Components of a Prompt

This table breaks down the fundamental building blocks of a professional prompt. Blending these components is how you build sophisticated, reliable AI systems.

Practical Examples of Prompt Engineering

Theory is useful, but seeing prompts in action demonstrates their impact on product performance and operational efficiency. Here are two real-world examples.

Example 1: Transforming a Customer Support Chatbot

Many teams start with a simple, one-line prompt that fails in production. Vague instructions force the model to guess, resulting in generic, unhelpful answers that tank customer satisfaction and create more work for human agents.

Before: A Vague, Low-Impact Prompt

An initial prompt for a support bot often looks like this:

Answer the customer's question about their order.

This prompt lacks a persona, context on tone, and guidance on what to do if the answer isn't available. The result is an unreliable and unprofessional interaction.

After: An Engineered, High-Impact Prompt

By applying prompt engineering principles, we create instructions the AI can follow reliably.

Prompt Template:

You are "SproutBot," a friendly and helpful customer support assistant for a SaaS company. Your goal is to resolve customer issues efficiently and politely.

**Instructions:**1. Adopt a patient and professional tone.2. Use the provided **CONTEXT** to answer the user's **QUESTION**.3. If the answer is not in the context, say: "I couldn't find the information for that specific order. To get the best help, please connect with a human agent." DO NOT invent an answer.4. Structure your final response as a JSON object with two keys: "answer_text" (your friendly response) and "status" (either "resolved" or "escalation_needed").

**CONTEXT:** {retrieved_order_details}**QUESTION:** {customer_query}

This refined prompt establishes a persona ("SproutBot"), gives step-by-step instructions, includes a negative constraint (what not to do), and specifies a structured output format (JSON). This makes the bot’s behavior predictable and its output machine-readable, allowing easy integration with support ticketing platforms. The business impact is a measurable drop in support tickets and a significant increase in first-contact resolution rates.

Example 2: RAG for Internal Document Summarization

A common enterprise use case for LLMs is summarizing internal knowledge bases like financial reports or technical wikis. A Retrieval-Augmented Generation (RAG) system is ideal for this, as it grounds the model’s answers in specific, retrieved information, which drastically reduces hallucinations.

The prompt is the glue that fuses the user's question with the relevant data retrieved by the system.

Alt text: Diagram illustrating the progression of AI prompting techniques: Zero-Shot, Few-Shot, and Chain of Thought.

This flow shows how you might start with a simple instruction and layer in more complexity, like examples (few-shot) or step-by-step reasoning (chain-of-thought), to help the model tackle tougher questions.

RAG Prompt Template for Financial Summaries

Prompt Template:

You are a financial analyst AI. Your task is to synthesize information from the provided text snippets to answer the user's question.

**Instructions:**1. Carefully review the following **DOCUMENT SNIPPETS** retrieved from our financial reports.2. Generate a concise, factual summary that directly answers the **USER QUESTION**.3. Cite the source for each key point using the format [Source: Document_ID].4. If the snippets do not contain enough information, state: "The provided documents do not contain sufficient information to answer this question."

**DOCUMENT SNIPPETS:**{retrieved_text_chunks_with_metadata}

**USER QUESTION:** {user_financial_query}

This prompt forces the model to stick to facts by using only provided sources, cite its claims for verification, and handle ambiguity gracefully. This disciplined process is essential for any production-ready AI system. As prompt engineering matures, related fields like Generative Engine Optimization (GEO) are emerging, highlighting the importance of well-structured, high-quality information. For more on how models interpret language patterns, our guide on what is natural language processing is a great resource.

Deep Dive: Trade-offs, Techniques, and Pitfalls

Once you move beyond basic prompts, you'll encounter challenges that require a more strategic approach. Building production-grade AI means anticipating failure modes and making smart trade-offs between speed, cost, and accuracy.

Core Prompting Techniques

Your goal is to provide just enough guidance to get the job done correctly every time, without room for misinterpretation.

- Zero-Shot Prompting (The Direct Command): Give a direct command without examples. Use for simple, well-defined tasks like summarization or translation where the model has strong built-in understanding. Business Impact: Excellent for rapid prototyping, keeping initial development costs low while quickly validating an idea.

- Few-Shot Prompting (Learning by Example): Provide 2–5 examples ("shots") of the desired input and output. Use when you need a specific output format (like JSON) or a consistent tone. Business Impact: Massively boosts the reliability of AI-driven workflows by ensuring consistent data structure, which prevents downstream errors and reduces manual cleanup.

- Chain-of-Thought (CoT) Prompting (Showing Your Work): Prompt the model to "think step by step" before giving a final answer. Use for multi-step math problems, logic puzzles, or any task requiring reasoning. Business Impact: Makes the AI's reasoning process auditable, allowing users and developers to verify logic and build trust in the outputs. It's how you avoid the "black box" problem.

Common Pitfalls and Advanced Strategies

Building production-grade AI systems requires a defensive mindset. Assume things will go wrong and engineer your prompts to be resilient.

- Prompt Injection: This security vulnerability occurs when a user input hijacks your model, making it ignore your instructions. Mitigate this by treating all user input as untrusted, adding instructional guardrails to your system prompt, and validating model outputs.

- Prompt Brittleness: A prompt perfected for one model version (e.g., GPT-4) may break with an update (e.g., GPT-4o). The only reliable solution is to build a robust evaluation suite ("unit tests" for prompts) to automatically benchmark performance and catch regressions before they affect users.

When to Fine-Tune Instead of Prompting

As prompts become overly complex, you face a strategic choice: continue adding instructions or fine-tune a model?

Prompt engineering is for controlling how a model behaves. Fine-tuning is for teaching a model what it needs to know by embedding deep domain expertise.

It's a classic trade-off:

For example, a tool analyzing legal contracts can start with a large prompt full of legal principles. But for more nuanced interpretations, it's more effective to fine-tune a model on thousands of annotated legal documents. This teaches the model to think like a lawyer, achieving accuracy that a prompt alone cannot.

Prompt Engineering Starter Checklist

This checklist provides a repeatable framework for creating production-ready prompts. Use it during sprint planning to ensure your team covers all critical steps from business alignment to performance measurement.

Alt text: A handwritten checklist on a yellow sticky note covering prompt engineering topics like business objective, output format, and metrics.

[Download the Prompt Engineering Starter Checklist (PDF)]

What to Do Next

- Audit Your Current Prompts: Use the checklist above to evaluate one of your existing AI features. Identify gaps in clarity, constraints, or error handling.

- Run a Small Test: Select a low-performing prompt and spend a few hours refining it using the "After" example as a model. Measure the change in output quality and consistency.

- Build Your Team's Capability: If your team is struggling to get reliable results from AI, it's time to bring in expert help. ThirstySprout connects you with pre-vetted AI and ML engineers who specialize in building production-grade systems.

Ready to build a team that can turn powerful AI models into reliable products? Start a pilot in just 2–4 weeks.

References and Further Reading

Hire from the Top 1% Talent Network

Ready to accelerate your hiring or scale your company with our top-tier technical talent? Let's chat.